Intellect Pathway Solutions Ltd. (IPS) proudly highlights the groundbreaking contributions of its collaborative researcher, Jiaqi Yuan, whose recent co-authored publications are setting new standards in augmented reality displays and depth-sensing systems. These breakthroughs are poised to redefine the landscape of augmented reality (AR) and depth-sensing technologies, offering practical solutions to longstanding industry challenges. More than a technical milestone, Yuan’s work signals a profound shift in how humans will interact with digital environments.

IPS provides innovative academic and career guidance solutions, with a proven track record of supporting professionals across all career stages through partnerships with over 12 universities worldwide and over 80 successful client cases. Its team of experienced consultants includes professors from top U.S. universities and tech & finance leaders from Fortune 500 companies, bringing deep expertise in various academic fields, including Computer Science, Engineering, and emerging technologies. Among all the rising talent IPS tracks and collaborates with, Yuan's trajectory stands out.

Jiaqi Yuan is not a typical graduate student. Pursuing dual Master's degrees at the University of Southern California, in Computer Science (2023-2025) and Electrical Engineering (2021-2022), Yuan approaches research with a level of technical fluency and purpose rarely seen at this stage. While most students are still figuring out which simulation software to use, Yuan has spent more than six years working with the field's most sophisticated tools, including COMSOL Multiphysics, Lumerical FDTD, and Zemax optical design software. His research output is equally impressive: 13 journal articles, 1 patent, 4 conference papers, and 234 citations on Google Scholar.

Yet, numbers alone don’t capture the full scope of Yuan’s impact. What truly sets him apart is his ability to translate advanced scientific concepts into real-world applications—a trait IPS identifies as a hallmark of researchers who drive meaningful change across industries.

Paper One: Solving AR's Eye Strain Problem Once and for All

Anyone who has spent time with AR headsets knows the drill: after ten or fifteen minutes, your eyes start protesting. This phenomenon, known as vergence-accommodation conflict (VAC), occurs when the eyes converge on a virtual object at the depth where it appears to be while having to focus at a different depth, where the image is actually formed. It's like your brain is constantly being lied to about where things are in space.
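
To give a rough sense of scale for the conflict described above, the sketch below compares where the eyes converge with where they must focus. It is an illustration only, not a calculation from the paper: the 63 mm interpupillary distance, the 2.0 m fixed focal plane, and the function names are all assumptions chosen for the example.

```python
import math

# Hypothetical viewing setup; none of these numbers come from the paper.
IPD_M = 0.063          # assumed interpupillary distance (63 mm)
FOCAL_PLANE_M = 2.0    # assumed fixed focal plane of a conventional headset


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two lines of sight when fixating at distance_m."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def vac_diopters(virtual_distance_m: float,
                 focal_plane_m: float = FOCAL_PLANE_M) -> float:
    """Mismatch between vergence distance and focus distance, in diopters (1/m)."""
    return abs(1.0 / virtual_distance_m - 1.0 / focal_plane_m)


if __name__ == "__main__":
    for d in (0.5, 1.0, 2.0):
        print(f"object at {d} m: vergence {vergence_angle_deg(d):.2f} deg, "
              f"conflict {vac_diopters(d):.2f} D")
```

Under these assumed numbers, a virtual object placed at 0.5 m produces a 1.5 diopter mismatch, while an object placed exactly at the focal plane produces none. A varifocal display aims to shrink that mismatch by moving the focal plane to the object.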

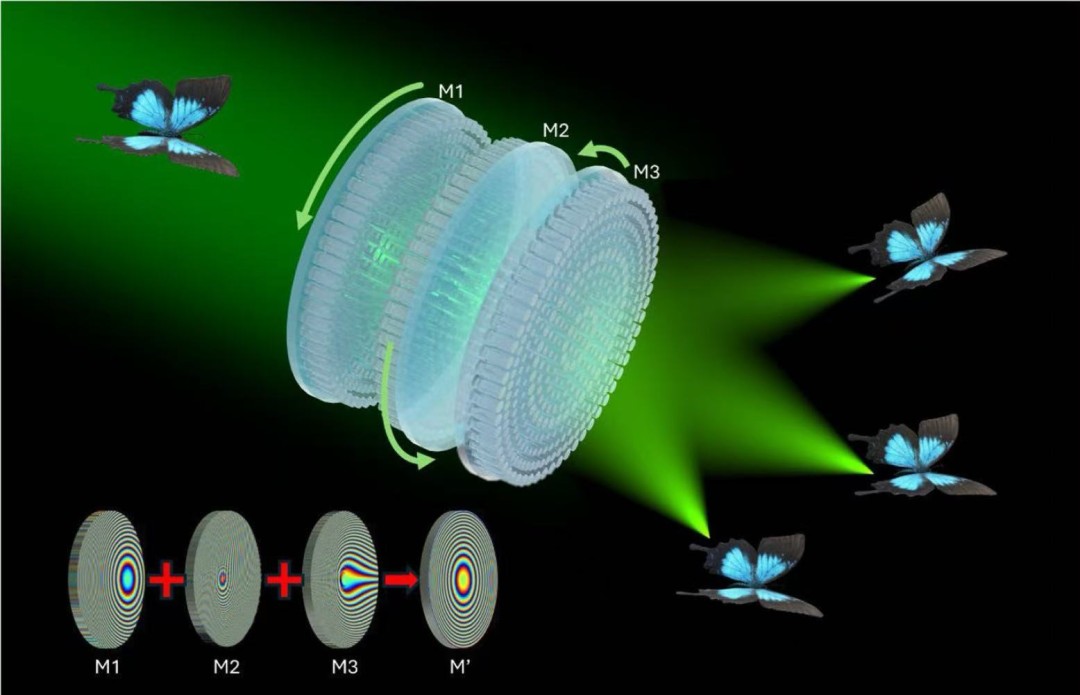

Yuan's breakthrough, published in PhotoniX this year as co-first author, tackles this head-on with what he calls a "three-dimensional varifocal meta-device." The name might sound intimidating, but the concept is elegant: three ultra-thin surfaces covered in precisely arranged titanium dioxide nanopillars that can manipulate light in ways that would seem impossible just a few years ago.

What makes this innovation truly revolutionary is that no previous metasurface technology in AR displays has managed to create dynamically adjustable focal points in three-dimensional space. Think of it as giving AR systems the ability to instantly adjust focus not just for distance, but for any point in 3D space around you—like having glasses that automatically adjust their prescription hundreds of times per second.

The technical specifications are impressive. The meta-device achieves an effective focal length ranging from 3.7 mm to 33.2 mm and can adjust the lateral focal point within the same range, with a dynamic eyebox size varying from 4.2 mm to 5.8 mm. But what this really means is virtual objects that appear at their actual depth, eliminating the eye strain that's been holding back AR adoption.

Yuan's practical approach shines through in the design choices. While competitors chase complex holographic systems requiring potentially hazardous laser illumination, his device works with simple LED light sources. The polarization-insensitive design, utilizing TiO₂ nanopillars, enhances system versatility while maintaining a compact enough size for actual wearable devices. It's the difference between a fascinating lab demonstration and something you might actually want to wear.

Paper Two: Teaching Machines to See Like We Do

Another accomplishment Yuan was involved in at Dr. Din Ping Tsai’s lab, published in Advanced Materials, tackles an equally challenging problem: how to equip machines with human-like depth perception that functions reliably regardless of lighting conditions. His solution is beautifully engineered—a dual-mode achromatic meta-lens array comprising 3,000 individual lenses with 33 million nano-antennas, fabricated from gallium nitride using advanced techniques like MOCVD and electron-beam lithography.

The system's strength lies in its adaptability: it perceives depth variation in both bright and dark conditions. In effect, it combines human-like vision with something akin to echolocation in one compact package.

However, the real innovation stems from the integration of custom neural networks, Light-Field Net and Structured-Light Net, along with an Adaptive Edge-Preserving Filter. The result is depth mapping accurate to within half a centimeter over a range of 21.0 cm to 50.5 cm, with a particularly impressive ability to see through optical illusions: it correctly measures the depth of flat 2D objects that only appear three-dimensional.
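
To put the half-centimeter figure in proportion, the short sketch below converts it to a relative error at the two ends of the quoted range. It assumes the 0.5 cm figure is a uniform absolute bound across 21.0 cm to 50.5 cm, which the text above does not spell out. The function name is hypothetical.

```python
# Assumption: the 0.5 cm accuracy applies as a constant absolute bound
# over the whole quoted range; the source does not state how it varies.
ABS_ERROR_CM = 0.5
NEAR_CM, FAR_CM = 21.0, 50.5


def relative_error_percent(abs_error_cm: float, depth_cm: float) -> float:
    """Absolute depth error expressed as a percentage of the measured depth."""
    if depth_cm <= 0:
        raise ValueError("depth must be positive")
    return 100.0 * abs_error_cm / depth_cm


if __name__ == "__main__":
    for depth in (NEAR_CM, FAR_CM):
        pct = relative_error_percent(ABS_ERROR_CM, depth)
        print(f"{depth:.1f} cm: about {pct:.2f}% relative error")
```

Under that assumption, the bound works out to roughly 2.4% of the distance at the near end and about 1% at the far end.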

This innovation extends far beyond academic achievement. Autonomous vehicles desperately need this kind of reliable depth perception. AR systems need to understand the real world to overlay virtual objects convincingly. Even consumer applications, such as smartphone portrait mode photography, could benefit from this level of sophisticated depth understanding.

Why This Work Matters Beyond Academic Circles

According to forecasts, the global market for augmented reality will be worth $198 billion by 2025. Yet the technology remains underdeveloped, largely because of factors that hamper widespread use and acceptance, such as the discomfort that comes with wearing a headset. Yuan's varifocal display prototype is aimed squarely at that main barrier on the road to mass adoption: AR headsets that become uncomfortable during extended use.

The applications extend beyond fun and games. Take, for example, surgeons who rely on augmented reality overlays during difficult operations: with poorly suited equipment, the display strains and tires the eyes, limiting how effectively they can work through a long procedure. Or consider AR guidance for truck and forklift operators that automatically adjusts to the distance at which they are working. Yuan's technology moves these scenarios from imagination toward practical reality.

His depth perception work also holds promise for self-driving cars, robotics, and other fields that rely heavily on solving 3D spatial problems. The system is particularly suited to applications where time-of-flight systems are likely to fall short because of demands for low power consumption, high precision, and continuous operation.

The Convergence Strategy

The core of the research portfolio is the interplay of two cutting-edge technologies, each of which adds to the significance of the other and draws on a broad base of expertise. At the heart of the varifocal AR display is light manipulation at the nanoscale. The depth perception system, in turn, combines advanced optics with contemporary AI methods to enable 3D spatial analysis.

This strategic intersection points toward integrated solutions that can serve many industries at once. Envision a set of AR goggles that combines dynamic focus adjustment with instantaneous depth perception, embedding both display and measurement functions within a single device for greater realism and safety. Consider augmented reality surgical systems coupled with AI-enhanced depth perception that responds in real time, supporting a level of accuracy beyond what the unaided eye can achieve and helping to minimize errors.

Jiaqi Yuan is a name to watch for anyone working at the nexus of optics, artificial intelligence, and practical innovation. His work is not just pushing scientific boundaries—it is laying the foundation for technologies that will seamlessly integrate into our everyday lives. From laboratories to global markets, his trajectory points toward a future where today's research becomes tomorrow’s essential tools.

Media Contact

Company Name: Intellect Pathway Solutions Ltd.

Contact Person: Cris Edward

Country: China

Website: https://www.intellectpaths.com/